Research

We focus on developing and applying advanced machine learning techniques to extract actionable insights from routine clinical data, enabling more precise diagnostic, prognostic, and therapeutic decisions. These are our main research fields:

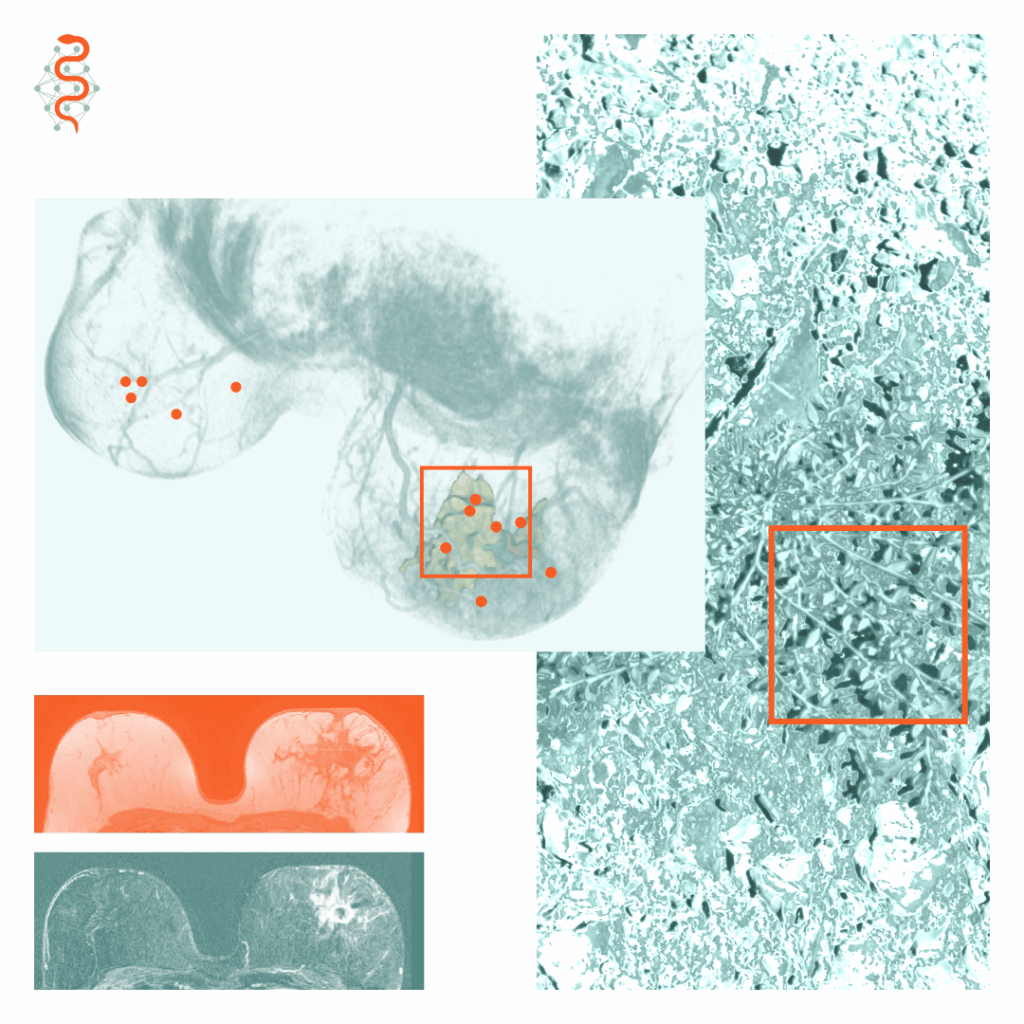

- Multimodal AI

- Diffusion Models and GANs

- Large Language Models

- Cancer Imaging

- Musculoskeletal Imaging

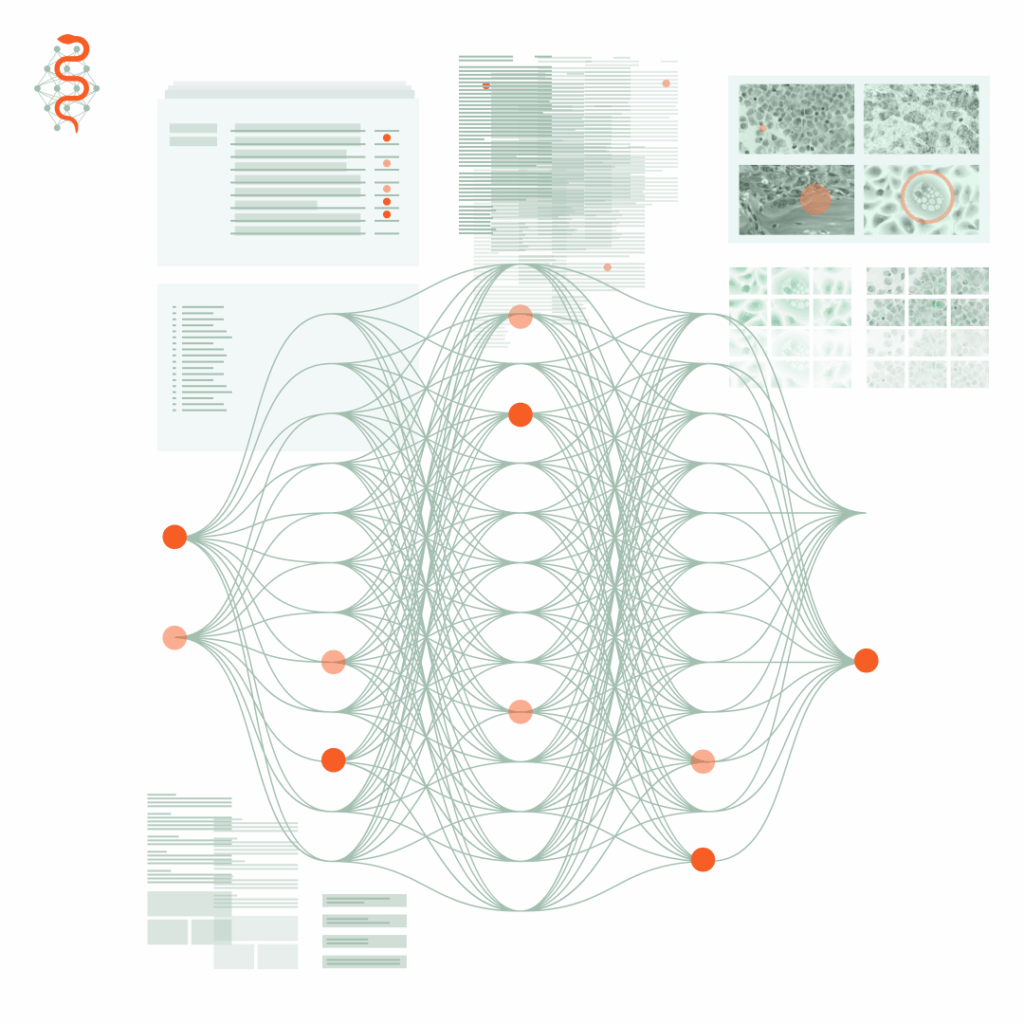

Multimodal AI

We develop multimodal fusion algorithms that combine information from multiple imaging modalities, patient histories, and multi-omics data. By integrating these diverse data sources, we aim to enable more precise diagnostic, prognostic, and therapeutic decisions.

Papers

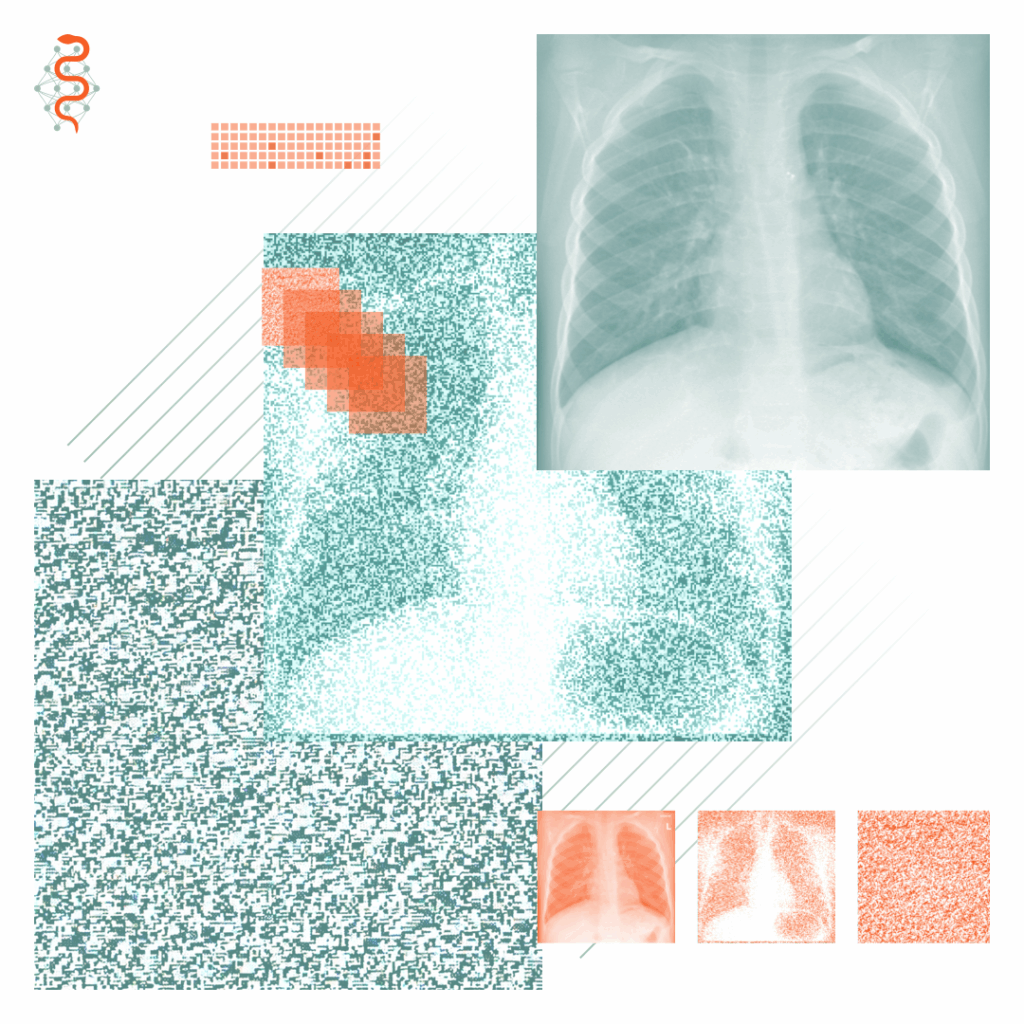

Diffusion Models and GANs

We investigate the potential of generative models, such as diffusion models and Generative Adversarial Networks (GANs), in the medical domain. We use these techniques to synthesize realistic medical images, both to facilitate data sharing and to augment training data. By advancing generative modeling, we seek to enhance the capabilities of AI systems in healthcare.

Papers

Large Language Models

We explore the capabilities of large language models in processing and analyzing medical data. By fine-tuning these models on domain-specific medical texts and electronic health records, we aim to extract relevant information, generate clinical summaries, and support decision-making. Our research investigates the potential of language models in tasks such as medical question answering, clinical note summarization, and patient risk stratification.

Papers

- Comparative Analysis of Multimodal Large Language Model Performance on Clinical Vignette Questions. JAMA Network.

- Extracting structured information from unstructured histopathology reports using generative pre-trained transformer 4 (GPT-4). The Journal of Pathology.

- Large language models should be used as scientific reasoning engines, not knowledge databases. Nature Medicine.

- Large language models streamline automated machine learning for clinical studies. Nature Communications.

Cancer Imaging

One of our primary areas of expertise is precision oncology of solid tumors, including immunotherapy. We develop deep learning methods for automated tumor segmentation, characterization, and treatment response assessment from medical images. Our research aims to reduce inter-observer variability in cancer diagnosis and provide objective tools to assist pathologists and radiologists. We also work on identifying clinically relevant morphologic phenotypes and biomarkers associated with response to specific therapeutic agents.

Papers

- Using Machine Learning to Reduce the Need for Contrast Agents in Breast MRI through Synthetic Images. Radiology.

- Radiomic versus Convolutional Neural Networks Analysis for Classification of Contrast-enhancing Lesions at Multiparametric Breast MRI. Radiology.

- Artificial intelligence in liver cancer — new tools for research and patient management. Nature Reviews Gastroenterology & Hepatology.

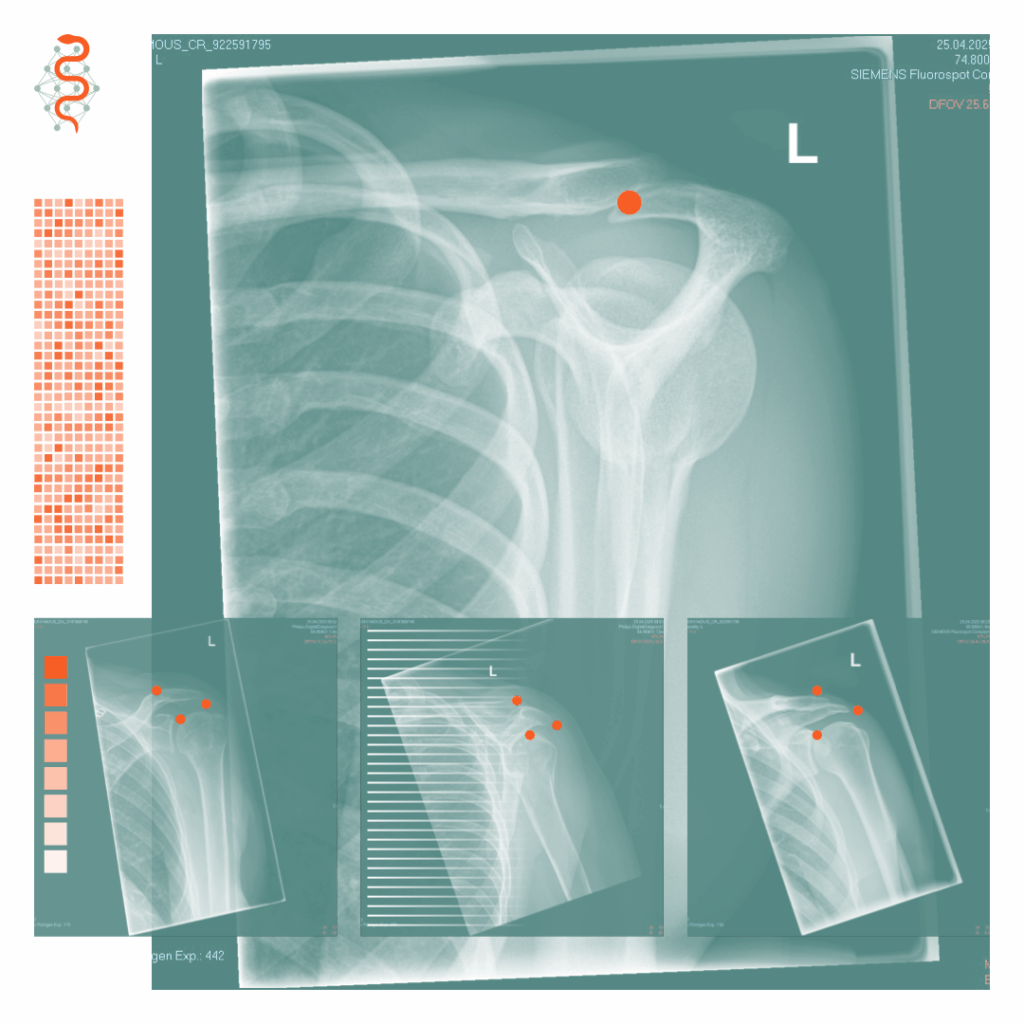

Musculoskeletal Imaging

Our lab also focuses on applying AI techniques to musculoskeletal imaging. We develop methods for automated segmentation and analysis of musculoskeletal structures, such as bones, cartilage, and tendons, from MRI data. Our research aims to improve the accuracy and efficiency of musculoskeletal disease diagnosis, monitor disease progression, and assess treatment outcomes. We collaborate closely with clinical experts to develop AI-assisted diagnostic tools that can be integrated into clinical workflows.

Papers